Marketers tend to pride themselves on three things: creativity, originality, and trendiness. They like shiny new objects, and they want to be first-to-market.

When generative AI was thrust into the spotlight in November, 2022, it set off alarm bells. Creatives had thought they were immune to automation – surely the ‘three things’ could only be achieved by humans? They suddenly felt vulnerable. What were marketers to do?

The first reaction was “it’s the new trend, let’s dive in and use the tech.” It set off a wave of interest in other gen-AI programs in disciplines such as images, audio and video. Learning to come up with effective prompts became an early imperative. There was plenty of hand-wringing over the potential for job losses, leading to mantras about combining human ingenuity with technology for maximum effect.

The hype cycle peaked around mid-2024. The technology was improving by leaps and bounds, but the market was flooded with too many gen-AI products, the ‘fear factor’ had settled down, and a key question was being asked: Is gen AI a solution in search of a problem?

It’s the flip side of a typical launch point for entrepreneurs, who aim to ideate, produce and sell solutions to common problems. Many of the popular tasks for which gen AI was used were administrative in nature, or supplemented research, writing or customer service (in the form of chatbots, for example). Relying too heavily on the technology without the requisite safeguards had the potential to create problems rather than solve them.

There were cases such as these:

A small-claims adjudicator in Canada ordered an airline to pay a grieving passenger who argued they were misled by the company’s chatbot into purchasing full-price tickets.

One of the world’s top tech companies suspended use of an AI service after an automated alert, attributed to a news outlet, incorrectly told some readers that Luigi Mangione, the suspect in the fatal shooting of the UnitedHealthcare CEO, had shot himself.

The CEO of a networking-tool startup apologized after AI-generated emails to users commented on their LinkedIn profile photos, appearing to be written in the style of Donald Trump, with a marked contrast between messages sent to women and those targeted to men.

What we’re witnessing is a push and pull between the instincts to be creative, original and trendy, and the importance of brand protection. The mistakes are cautionary tales. Trust is paramount.

The Globe and Mail assembled an AI Council in May, 2023, to table legal and ethical issues, and to set up a process for teams to make resource and tool requests. Within a month the newsroom published a memo on its initial guidelines, for public transparency and to assist others developing their own approaches.

My instinct as a marketer was to push the boundaries. From the get-go, I considered several ways to use gen AI to save time and money. I wanted to start testing the top platforms, and I wanted to post results in market sooner rather than later. The first few years were a trying time. It seemed like I was caught up in a lot of talking and not enough acting.

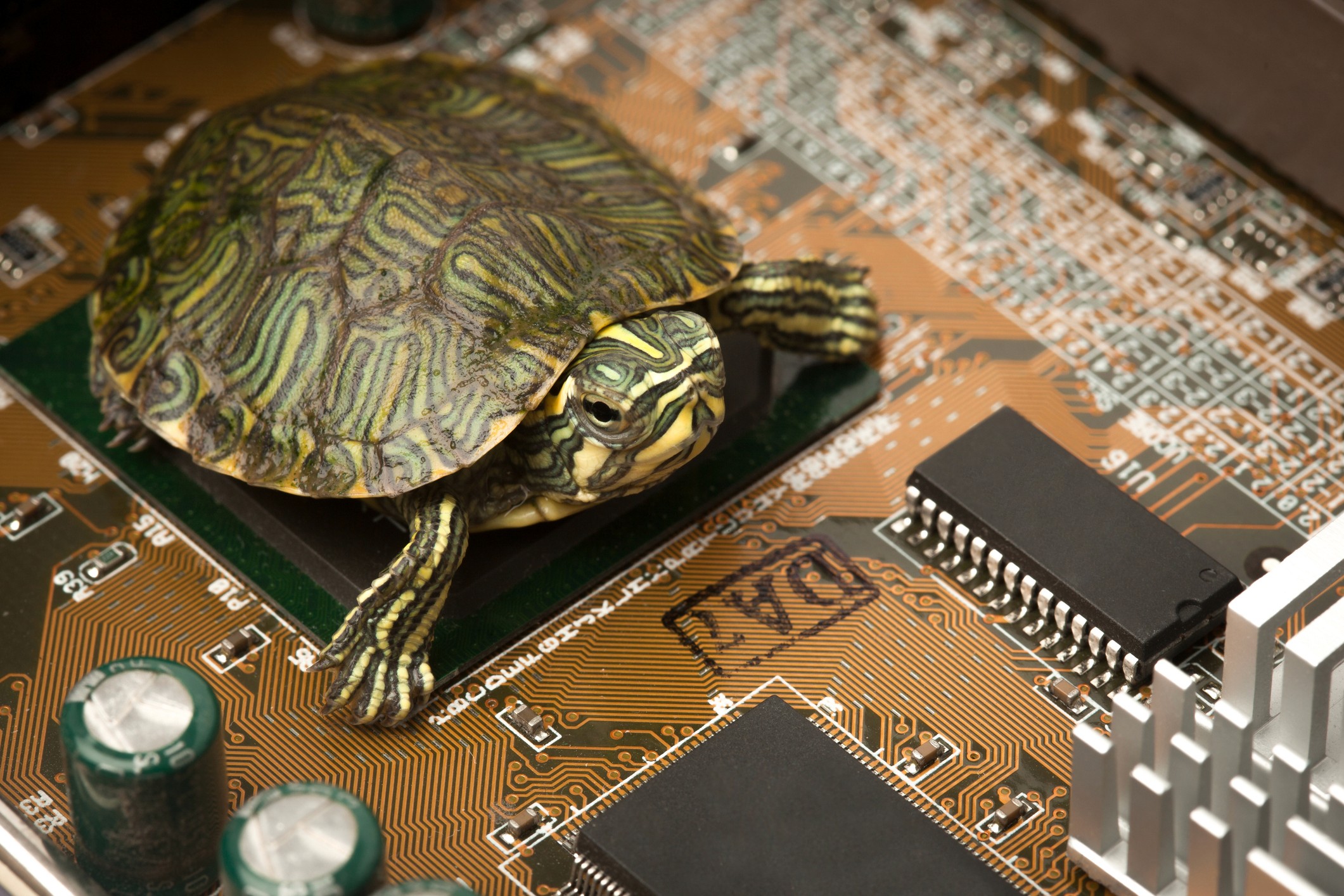

I later came to realize that “slow but steady wins the race” is a fitting phrase when it comes to using gen AI in a ‘live’ environment. No matter how many checks and balances you put into place, AI can ‘hallucinate,’ or produce made-up information. When you ‘feed the beasts’ – add your original material to third-party gen-AI platforms – you are training their models and could be subjecting your work to unknown uses. Gen-AI outputs can contain copyrighted or trademarked elements. You also have to be mindful of potential bias and discriminatory outcomes.

Extensive testing is warranted, whether you’re building or licensing gen-AI programs. Humans should still be checking and recording relevancy and accuracy throughout the process. For full transparency, it’s good practice to use disclaimers any time AI plays a role in production.

I learned all this and more during the process of bringing The Globe’s Climate Exchange to market. Readers were offered the opportunity to pose questions about climate change, which the newsroom vetted and then assigned reporters to answer. Selected responses are now found with the help of a QA bot developed by The Globe that uses AI to match reader queries with the closest answer drafted.

What are some key takeaways for gen-AI projects?

Decide on the problem you are aiming to solve.

Assemble a working group, and consider representatives from legal, IT, UX/dev, data, HR, marketing and communications. Think of members as helpers, not barriers.

Conduct a thorough analysis of all related risks, knowing hallucinations likely cannot be eliminated.

Give prominence to disclaimers.

Use shared documents to set out guidelines for staff, including provisions for damage control.

Work completed or assisted by gen AI should only be public-facing when your business is completely comfortable with the process and the expected outcomes.

I like the phrase ‘trust your instincts,’ but when it comes to gen AI in the marketing space, ‘control your instincts’ might be more appropriate.

Sean Stanleigh is head of Globe Content Studio, the content-marketing division of The Globe and Mail, a Canadian media company. Follow him at linkedin.com/in/seanstanleigh/